Just as we had to deploy application transformation management in Step 3, here we’ll implement and maintain data transformation management.

Now that the applications have been stabilized on a unified technical platform, their extraneous objects have been removed, and application transformation management has been installed, we’re ready to perform the bulk of the work to conform the seven different yet similar database schemas into a single, fully-normalized design along with a single MS Access application.

Steps 1-3 are all infrastructure orientated. During Step 4, new database application enhancements, required by the business, may be developed.

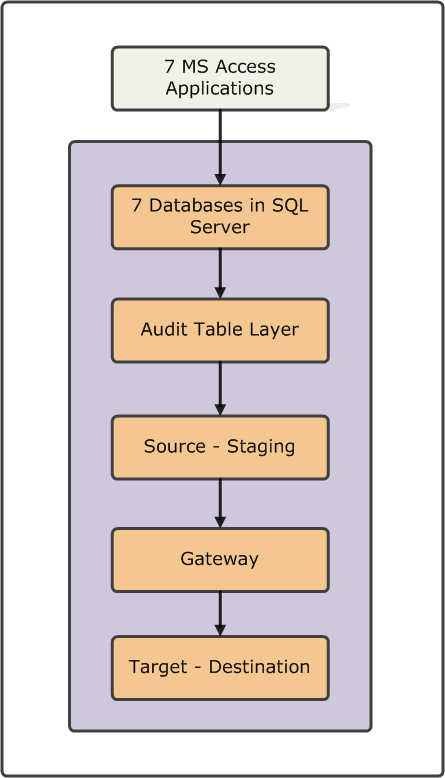

Conceptual Solution Architecture

Data is edited through the seven MS Access applications which are linked seven database schemas on the SQL Server. Each applications’ creates, reads, updates, and delete (CRUD) operations inserts a new record into the corresponding audit table, which employs an effective dating algorithm. The staging environment extracts only the current records from the audit table layer and inserts them into the staging environment. The gateway performs extract, transform, and load (ETL) operations against the staging tables and inserts and updates the target destination tables. The frequency of gateway/ETL operations determines the latency between the source and target tables and may be adjusted.

Detailed Description of each object in the step 4 conceptual solution architecture

Seven MS Access Applications

The client’s original MS Access application forms and reports, with the metadata columns are added in Step 2.

Seven Database Applications on SQL Server

The client’s original database tables migrated to SQL Server with the supporting metadata columns are added in Step 2.

These database tables will be consolidated over time and conformed by the ETL process within the gateway, until all data resides only in the target tables, and all applications have been consolidated into a single, master MS Access application linked to the target tables.

The audit table layer requires its source to support database triggers and other high-performance Relational Database Management System (RDBMS) features not found within the MS Access technical platform, which is why the tables are moved to SQL Server.

Audit Table Layer

The audit layer is comprised of two types of objects: 1) tables to hold the contents of the CRUD operations, and 2) a set of read-only views that display only the current values for every record in the system.

Database Table Name / Audit Table / View

GasDB1Customer

GasDB1Customer_audit

GasDB1Customer_view

Every table in the SQL Server database instance has a sister audit table that is filled by a single insert for every CRUD operation. For example, if GasDB1.Customer table has 500, records and 250 records receive some sort of CRUD operation, then the GasDB1Customer_audit table will contain 750 records. Every audit table uses an effective dating algorithm to keep track of the history of a record. This is performed by adding three new columns to every audit table: 1) effective_start_datetime, 2) effective_end_datetime, and 3) current_YN. In this example, only 500 of the 750 records in the audit table will have a current_YN=’Y’, while 250 records will have the current_YN=’N’.

When a record is first created at Time1, its effective_start_date = sysdatetime, while its effective_end_datetime will equal something like 1/1/2050 12:00. One second later, another CRUD operation occurs against the same record located in the seven databases in the SQL Server layer, and a new record is inserted into the corresponding audit table at Time2 with the default datetime, but then the previous record’s effective_end_datetime is updated from 1/1/2050 12:00 to sysdatetime.

Time1: INSERT GasDB1.Customer.Name = ‘Fred’.

Audit table: INSERT GasDB1.Customer_audit.Name = ‘Fred’,

effective_start_datetime=’201509210929′,

effective_end_datetime= ‘205001011200’,

current_YN = ‘Y’.

Then, one second later…

Time2: UPDATE GasDB1.Customer.Name = ‘Fredrick’,

Audit table: UPDATE GasDB1.Customer_audit WHERE Name = ‘Fred’

SET effective_end_datetime= ‘205001011230’

current_YN = ‘N’.

Audit table: INSERT GasDB1.Customer_audit.Name = ‘Fredrick’,

effective_start_datetime=’201509210929′,

effective_end_datetime= ‘205001011200’,

current_YN = ‘Y’.

The above describes how records flow into the _audit tables to form a history for every record change. The _audit tables allow us to answer the question: What was the state of every single record in the system given a particular point in time, say, 11:59am yesterday. Data consistency across all database tables becomes very important when pulling the data into the ETL process.

The _views, are stored queries written against the _audit tables that also leverage the effective dating algorithm to display only the most current instance of a given record. Simply put, WHERE (table_name)_audit.current_YN=’Y’.

One benefit to this design is that the operation of refreshing stage from the audit layer, as opposed to directly querying the seven live SQL Server databases, this design insures production performance will not impacted negatively by buffering all the records into the audit layer. Without the audit layer, a large data pull directly against the seven live SQL Server database would adversely effect the production application’s performance by potentially blocking or locking a user’s access to the database tables during the data pulling operation.

Please note that no applications are allowed to access the audit table layer directly.

Source – Staging

As often as is necessary, the staging environment is refreshed with data from the audit layer.

Stage consists of a single snapshot of all seven database tables at a single point in time. No database applications have access to the staging environment; its sole purpose is to be the source for the forthcoming ETL operation.

Gateway / ETL

The gateway is where ETL (extract from source, transform, and load into the target tables) occurs. Application and data transformation management is tightly coupled within the ETL logic. As an application change or database change occurs to consolidate or conform, the ETL is adjusted, and the applications are modified for the next release. Application transformation management is controlled by updates to the usys_user_role_object_rights table.

A source-to-target data mapping document is developed to aid in the communication and vetting process between IT and business subject matter experts (SMEs).

For the CPUC project, we’ll implement the ETL described within the source to target data mapping document in Microsoft SQL Server Integration Services (SSIS).

Target – Destination

The target is a fully normalized database schema in which all seven database architectures have been consolidated and conformed into a single source. Over time, the source will contain fewer and fewer tables and the target will contain more and more tables, until all the tables and the applications are consolidated into a single application connected to only tables in the target schema, and the ETL jobs will be turned off. This is an ideal state.

The overall conceptual solution architecture will continue to be leveraged after the seven MS Access database applications have been consolidated, and other legacy database applications that are good candidates for nurturing to a common technology stack will be managed through this established transformational process.

Example of some of the physical solution artifacts within Step 4

Source-to-target data mapping spreadsheet

ETL Job – SQL Server Integration Services – (SSIS)

An extract, transform, and load (ETL) job is a physical implementation of the above source-to-target data mapping spreadsheet. This is just one of many ETL jobs required to conform the seven MS Access databases into a single, normalized schema.

During the initial iterations through step 4, data that is unconformable systematically (out of bounds) requires data cleanup application screens to be developed to aid the department’s subject matter exports in the processing of cleansing and conforming the data.